Teamwork makes the dream work, but as a software developer you might wish it were easier to ignore. If users can create things with your app, pretty soon they’ll want to collaborate with others, and you can’t just tweak your authorization policies and cross your fingers. If two people are making changes at the same time, the second to save will overwrite the first’s work without even knowing. And losing your users’ data is the absolute worst.

I recently shipped tools for authors on Gala, my team’s platform for multimedia case studies, and the question of collaboration was one of the most foreboding hurdles to clear. Telling users “sorry, that’s out of scope” wasn’t an option, since we preach that teams of students, professors, and practitioners working closely together produce the best case studies. Even still, I had a few candidate designs to choose between.

Different Options for Concurrency Control

Optimistic Locking

The simplest option that could possibly work without resulting in data loss is optimistic locking. In this strategy, we ask clients to identify their version of the document (with a timestamp, for example) when submitting their changes to it. When it receives a submission, the server checks that the document hasn’t been modified by someone else in the meantime. If it has (that is, if the user’s timestamp doesn’t match the server’s), the server rejects the changes. It’s called “optimistic” because the system assumes there won’t be a conflict, and only does anything to deal with the possibility of data loss once it’s certain to be needed.
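As a rough illustration of that check, here is a minimal in-memory sketch. It is not Gala's code: the `DocumentStore` class and `StaleVersionError` are hypothetical names, and it assumes an integer version counter where the text above mentions a timestamp.

```javascript
// Minimal in-memory sketch of optimistic locking (all names hypothetical).
class StaleVersionError extends Error {}

class DocumentStore {
  constructor(body) {
    this.body = body;
    this.version = 1; // bumped on every accepted save
  }

  // The client sends back the version it last read along with its changes.
  save(newBody, clientVersion) {
    if (clientVersion !== this.version) {
      // Someone else saved in the meantime: reject instead of overwriting.
      throw new StaleVersionError(
        `expected version ${this.version}, got ${clientVersion}`
      );
    }
    this.body = newBody;
    this.version += 1;
    return this.version;
  }
}

const doc = new DocumentStore("draft");
const aliceVersion = doc.version; // Alice and Bob both read version 1
const bobVersion = doc.version;

doc.save("Alice's edit", aliceVersion); // accepted; the version is now 2

let rejected = false;
try {
  doc.save("Bob's edit", bobVersion); // stale: Bob still holds version 1
} catch (error) {
  rejected = error instanceof StaleVersionError;
}
```

Bob's save is refused and Alice's work survives, but Bob is now left holding changes he has to reapply by hand.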

From a back-end perspective, this is the simplest — but that’s only because it pushes all the complexity on to the user. Your change has been rejected because someone else beat you to the punch. Submit it again when you’re up to date. In a programming context, this is called a merge conflict; you may be feeling anxious all of a sudden. I can’t ask my users to deal with the merge conflict manually, and giving them the tools to do so (adding a diff interface?) would be a huge pain.

So what about automatically resolving these merge conflicts? Simply transform your record of your user’s unsaved changes (you’re keeping a record of edit operations, right?) to take into consideration the other user’s changes. Then you just replay the transformed changes starting from the new version.

Simply. Just. Well, that’s the gold standard solution — that’s what Google Docs does to enable truly real-time collaborative editing. (And if you’re interested in learning more about how that kind of thing works, let me recommend this excellent talk given by Justin Weiss at RailsConf 2018.)

The catch, however, is that the transformation logic grows in complexity with how many kinds of operations you allow your users to perform.

With plain unstructured text, you’re only looking at insert_characters and remove_characters, so it’s pretty manageable.
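To give a feel for what "transforming" means, here is a small sketch that handles only one pair of operations: an insert against a concurrent insert. The operation shape and the function names are assumptions made for this example, and a real system would also need rules for removals and a consistent tie-break when two inserts land at the same position.

```javascript
// Hypothetical operation shape: { type: "insert", position, text }.
function applyInsert(text, op) {
  return text.slice(0, op.position) + op.text + text.slice(op.position);
}

// Rewrite `op` so that it can be applied after `other` has already been applied.
function transformInsert(op, other) {
  if (other.position <= op.position) {
    // The other insert landed at or before ours, so our position shifts right.
    return { ...op, position: op.position + other.text.length };
  }
  return op;
}

const base = "Hello world";
const mine = { type: "insert", position: 11, text: "!" };  // I append "!"
const theirs = { type: "insert", position: 5, text: "," }; // they add a comma

// Their change was saved first; replay mine on top of the new version.
const afterTheirs = applyInsert(base, theirs);
const merged = applyInsert(afterTheirs, transformInsert(mine, theirs));
```

Here `merged` ends up as "Hello, world!". Every additional kind of operation needs its own rule against every other kind, which is where the complexity comes from.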

But a case study on Gala consists of Page and Card objects with highlighted spans corresponding to attached Edgenote objects (embedded media elements).

It’s very rich, very structured text. Oops?

Pessimistic Locking

So I could convert my editing system to use delta encoding, add the concept of versions to my models, and spend a very long time writing (unavoidably buggy) “rebase” logic for every pair of operations... Oh, and we need it mostly ready by next week. There must be another way.

We can accept a little more complexity in the system for decreased complexity in the client logic. If we stop a user from making changes until the first user has finished, then we’ll never have any merge conflicts to deal with. This is called pessimistic locking, since every edit begins with the assumption that someone will come along and make a conflicting edit. Before you start changing, you “take a lock” on the object you’re changing. While you’re editing, anyone else who tries to take their own lock will be refused. When you save your changes, you delete the lock and the next user can go ahead.
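The take, refuse, and release cycle can be sketched in a few lines. This is an in-memory illustration only: `LockTable` and its methods are hypothetical names, and this is not the Rails implementation described later.

```javascript
// In-memory sketch of pessimistic locks (hypothetical names).
class LockTable {
  constructor() {
    this.locks = new Map(); // key -> user currently holding the lock
  }

  // Returns true if `user` now holds the lock, false if someone else does.
  take(key, user) {
    const holder = this.locks.get(key);
    if (holder !== undefined && holder !== user) return false;
    this.locks.set(key, user);
    return true;
  }

  // Only the holder's release has any effect in this sketch.
  release(key, user) {
    if (this.locks.get(key) === user) this.locks.delete(key);
  }
}

const table = new LockTable();
const aliceFirst = table.take("document", "alice"); // Alice starts editing
const bobRefused = table.take("document", "bob");   // refused while Alice edits
table.release("document", "alice");                 // Alice saves and unlocks
const bobRetry = table.take("document", "bob");     // now Bob can go ahead
```

Nobody's work is ever rejected after the fact; the cost is that Bob has to wait his turn.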

The tradeoff to pessimistic locking is efficiency: sometimes your users will have to wait. This is really frustrating if, from the user’s perspective, they’re trying to edit a different part of the document that’s locked.

While the structured nature of Gala’s documents was an obstacle for an optimistic strategy, it’s a boon for a pessimistic one. Our locks can be scoped to a specific card or a specific Edgenote, and it’s much less likely to get in someone’s way. And if we expose the information about who else is editing the document, then collaborators can work it out between themselves (and it’s not the concern of my app!)

So that’s what I did.

- I added a Lock model belonging to lockable models, and rejected changes to those models by users other than the one holding the lock.

- Then I created a wrapper component in React which managed taking, giving back, overriding, and indicating the presence of the locks.

- Lastly, I set up a WebSocket connection to broadcast the creation and deletion of those locks, and the changes made to the underlying models, since none of this matters unless other users can see that the locks exist.

Implementing Locks in the Rails Backend

A Lock is a very simple model.

It belongs to a user and belongs polymorphically to a lockable model, which means that the model on the other side could be any type.

The only thing I’m doing differently is that I’m also recording the lockable model’s case study so that we can quickly query for the active locks when loading a case.

class Lock < ApplicationRecord

belongs_to :lockable, polymorphic: true

belongs_to :user

belongs_to :case_study, inverse_of: :active_locks

before_validation :set_case_study_from_lockable

private

def set_case_study_from_lockable

self.case_study = lockable.case_study

end

end

Lockable

Now, I want to have a nice clean API for all my lockable models, so I’ll make a module Lockable and include that wherever it’s needed.

This module adds the reverse association, and adds some methods that make using locks nicer.

Credit where it’s due: this module was based on one from DHH’s screencast about something completely different in which it appeared as he was walking through the Basecamp codebase.

(Have you seen those screencasts? They’re good.)

module Lockable

extend ActiveSupport::Concern

included do

has_one :lock, as: :lockable, dependent: :destroy

end

def lock_by(user)

create_lock! user: user

rescue ActiveRecord::RecordNotUnique

nil

end

def locked?

lock.present?

end

def locked_by?(user)

locked? && lock.user == user

end

def unlock

update! lock: nil

end

end

I am relying on a unique index on lockable_id in the database to prevent two people from having a lock on the same thing; that’s why lock_by has to handle the RecordNotUnique exception.

Verifying Locks

Then I made a controller concern to add a method verify_lock_on which I want to use as a before_action filter in my lockables’ controllers.

module VerifyLock

extend ActiveSupport::Concern

private

def verify_lock_on(model)

return if !model.locked? || model.locked_by?(current_user)

render json: model, status: :locked

end

end

# Which is used like...

class EdgenotesController < ApplicationController

include VerifyLock

before_action -> { verify_lock_on @edgenote }, only: %i[update destroy]

# ...

Careful readers may notice that I’m not requiring that a user updating a model have a lock on it themselves, only that nobody else does. There are a number of changes my users can make by pressing a single button and I didn’t think it made sense for those to require three API calls. Instead, I’m offering a pessimistic lock as an option to the client when the user is starting to make a longer change.

Managing Locks

Taking Out a Lock

Now, we need an API for the client to create locks when users start edits and to destroy them when the user is finished. You can create a lock with a POST request:

POST /locks HTTP/1.1

Content-Type: application/json

{ "lock": { "lockable_type": "Card", "lockable_param": 1 } }

Which is handled by the controller:

class LocksController < ApplicationController

before_action :set_lockable, only: %i[create]

def create

authorize @lockable, :update? # Using pundit for authorization

@lock = @lockable.lock_by current_user

if @lock.present?

render json: @lock, status: :created

else

render json: @lockable.lock, status: :conflict

end

end

private

def set_lockable

klass = params[:lock][:lockable_type].constantize

@lockable = klass.find params[:lock][:lockable_param]

rescue ActiveRecord::RecordNotFound, NameError

head :unprocessable_entity

end

end

And returns a lock object like this if it was successful.

HTTP/1.1 201 Created

Content-Type: application/json

{

"type": "Lock",

"table": "locks",

"param": "1",

"createdAt": "2018-07-03T16:22:41.746Z",

"lockable": {

"type": "Card",

"table": "cards",

"param": "1"

},

"user": { ... }

}

If another user already has a lock, we respond with the other lock in the same format and the status code 409 Conflict.

We’ll send this same lock object to the other users editing the case, so we’re including information about the user who owns the lock so it can be displayed.
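One way a client might interpret the two responses is sketched below. The helper name `interpretLockResponse` and the outcome labels are assumptions for illustration; only the 201 and 409 status codes and the shared lock format come from the description above.

```javascript
// Hypothetical helper: decide what the client should do with the server's reply.
function interpretLockResponse(status, lock) {
  switch (status) {
    case 201:
      // We hold the lock now.
      return { outcome: "acquired", lock };
    case 409:
      // The body is the other user's lock, in the same format,
      // so it can be shown to the user as-is.
      return { outcome: "held_by_other", lock };
    default:
      return { outcome: "error", lock: null };
  }
}

const acquired = interpretLockResponse(201, {
  type: "Lock",
  param: "1",
  user: { param: "42" },
});
const refused = interpretLockResponse(409, {
  type: "Lock",
  param: "1",
  user: { param: "7" },
});
```

Because both responses carry a lock object, the same display code can handle "you have the lock" and "someone else has it".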

Unlocking the Model After Submitting Edits

When the user has finished and submitted their changes, the client sends this

DELETE /locks/1 HTTP/1.1

Which is handled like this:

class LocksController < ApplicationController

before_action :set_lock, only: %i[destroy]

def destroy

authorize @lock.lockable, :update?

@lock.lockable.unlock

head :no_content

end

private

def set_lock

@lock = Lock.find params[:id]

end

end

I’ll make a quick note about the authorization logic here: I’m not requiring the user to be the owner of the lock in order to delete it. I’m only requiring that they are allowed to edit the locked model. Otherwise, if something went wrong and a user failed to unlock a model and walked away from their computer, it would be quite a pain for everyone else. This way, a user who can edit the case can also make the judgement call that after three hours so-and-so is probably not still working on that card.

OK, that’s the server side component of this pessimistic locking system. Let’s recap.

I added a locks table to the database, and a corresponding model object.

Locks belong to anything that satisfies the Lockable interface, and that relationship is how we check whether a user can edit a model or whether someone else has an exclusive lock on it.

And then we added a new controller for a few lock endpoints so users can create and destroy locks on the elements they’ll be editing.

Creating, Displaying, and Removing Locks with a Reusable Wrapper Component

With the back-end for pessimistic locking ready, we have to add front-end code everywhere that the user can edit anything. We need:

- to create a lock when the user begins editing

- to remove the lock when they finish — after their changes are saved

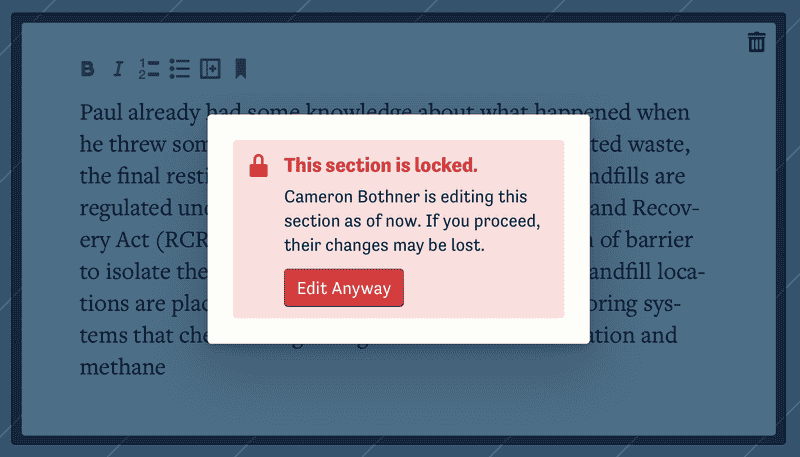

- to disable and overlay editable components with a notice when they are locked

- to provide a button that lets the user override the lock.

At one time, this would have been a truly intimidating task. With React, we can add this functionality in a truly reusable way.

Let’s start with the punchline — the API we want to end up with — to make it clear why I took the approach that I did. Suppose we have this:

<CardEditor value={contents} onChange={handleChange} />

We want to end up with this:

<Lock type="Card" param="1">

{({ onBeginEditing, onFinishEditing }) => (

<CardEditor

value={contents}

onChange={handleChange}

onFocus={onBeginEditing}

onBlur={onFinishEditing}

/>

)}

</Lock>

That is not too bad if that’s all the boilerplate that needs to be repeated throughout the codebase. I think it’s the least we can get away with: tell me what model we’re dealing with, and tell me when the user starts and stops editing.

That component uses the render props pattern, which means it expects its child to be a function that it will call with, in this case, two handler functions.

This is how that looks from inside of <Lock>.

function Lock({

children,

lock,

locked,

onBeginEditing,

onEditAnyway,

onFinishEditing,

visible,

}) {

return (

<>

{children({ locked, onBeginEditing, onFinishEditing })}

{visible && locked && (

<LockDetails lock={lock} onEditAnyway={onEditAnyway} />

)}

</>

);

}

The call to children is what renders the <CardEditor> from before, and then, if the component is locked and the lock should be visible (i.e. the user is in edit mode), it renders <LockDetails> right after it.

<LockDetails> is an absolutely positioned component that covers children with an overlay and tells the user who locked it and when.

It also presents an override button, just in case.

<Lock> is a simple presentation component; all the props and callbacks it passes to its children come from the Redux store and action creators.

In implementing this feature, I added an attribute to my Redux store to keep track of active locks.

It’s a key/value store that I index into with the type and param attributes the <Lock> consumer provides.

function mapStateToProps({ user, edit, locks }, { type, param }) {

const lock = locks[`${type}/${param}`];

return {

lock,

locked: lock && lock.user.param !== user.param,

visible: edit.inProgress,

};

}

The callbacks are straightforward passthroughs to Redux action creators.

function mapDispatchToProps(dispatch, { type, param }) {

return {

onBeginEditing: () => dispatch(createLock(type, param)),

onEditAnyway: () => dispatch(deleteLock(type, param)),

onFinishEditing: () => dispatch(enqueueLockForDeletion(type, param)),

};

}

Redux Action Creators Make the Requests and Keep Track of What is Locked

When the user starts editing, I call onBeginEditing and create a lock.

But when they finish I don’t delete the lock right away.

The user’s changes will not be saved immediately after the user’s focus leaves the particular editable element — I’m batching the changes to autosave every five seconds or when the user clicks “Save”.

So onFinishEditing enqueues the lock for deletion after those changes are saved.

That queue is stored in the Redux store as a simple array.

But there is a circumstance when we want to delete the lock right away: when the user is overriding someone else’s lock.

That’s why onEditAnyway dispatches deleteLock directly.

Let’s look at the Redux logic in more detail, starting with lock creation.

createLock posts a request to the endpoint we defined earlier except in one special case.

If the user is starting to edit an element they just finished editing, and we haven’t gotten around to deleting their lock yet, we just remove that lock from the deletion queue instead of trying to make a new one.

export function createLock(type, param) {

return (dispatch, getState) => {

const extantLock = getState().locks[`${type}/${param}`];

if (extantLock) {

dispatch(removeLockFromDeletionQueue(type, param));

return;

}

API.post(`locks`, {

lock: { lockableType: type, lockableParam: param },

}).then((lock) => dispatch(addLock(lock)));

};

}

enqueueLockForDeletion is straightforward.

After a little sanity check to make sure the user actually has the lock in question, we dispatch to add it to the queue.

export function enqueueLockForDeletion(type, param) {

return (dispatch, getState) => {

const { locks, user } = getState();

const gid = `${type}/${param}`;

if (locks[gid] && locks[gid].user.param === `${user.id}`) {

dispatch(addLockToDeletionQueue(gid));

}

};

}

After the user’s changes are saved, or if they override someone else’s lock, deleteLock does what its name suggests.

export function deleteLock(type, param) {

return (dispatch, getState) => {

const lock = getState().locks[`${type}/${param}`];

if (!lock) return;

const { param: lockParam } = lock;

API.delete(`locks/${lockParam}`).then(() => {

dispatch(removeLock(lockParam));

dispatch(removeLockFromDeletionQueue(type, param));

});

};

}

So that’s the front-end. There’s one Lock component that wraps anything editable and provides callbacks for when users begin and end editing. Those callbacks call Redux action creators where we hit the back-end API. And we keep track of lock state in the Redux store using a map of type and param to locks and a queue of locks to be deleted after the next save.
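The post doesn't show the reducer behind that state, so the following is only a guess at its general shape. The action type strings, the single combined state object, and keying removals by the `type/param` string are all assumptions made for this sketch.

```javascript
// Hypothetical state shape: a map of "Type/param" -> lock, plus a deletion queue.
const initialState = { locks: {}, deletionQueue: [] };

function locksReducer(state = initialState, action) {
  switch (action.type) {
    case "ADD_LOCK": {
      const { lock } = action;
      const gid = `${lock.lockable.type}/${lock.lockable.param}`;
      return { ...state, locks: { ...state.locks, [gid]: lock } };
    }
    case "REMOVE_LOCK": {
      // Drop the lock and make sure it is no longer queued for deletion.
      const { [action.gid]: _removed, ...remaining } = state.locks;
      return {
        locks: remaining,
        deletionQueue: state.deletionQueue.filter((gid) => gid !== action.gid),
      };
    }
    case "ENQUEUE_LOCK_FOR_DELETION":
      if (state.deletionQueue.includes(action.gid)) return state;
      return { ...state, deletionQueue: [...state.deletionQueue, action.gid] };
    case "DEQUEUE_LOCK":
      return {
        ...state,
        deletionQueue: state.deletionQueue.filter((gid) => gid !== action.gid),
      };
    default:
      return state;
  }
}

// Walk through one editing session on card 1.
const lock = {
  param: "7",
  lockable: { type: "Card", param: "1" },
  user: { param: "42" },
};
let state = locksReducer(undefined, { type: "ADD_LOCK", lock }); // focus
state = locksReducer(state, { type: "ENQUEUE_LOCK_FOR_DELETION", gid: "Card/1" }); // blur
const queuedAfterBlur = state.deletionQueue.slice();
state = locksReducer(state, { type: "DEQUEUE_LOCK", gid: "Card/1" }); // refocus before save
const queuedAfterRefocus = state.deletionQueue.slice();
state = locksReducer(state, { type: "ENQUEUE_LOCK_FOR_DELETION", gid: "Card/1" }); // blur again
state = locksReducer(state, { type: "REMOVE_LOCK", gid: "Card/1" }); // after the save
```

The refocus step is the special case from createLock: the lock stays in the map the whole time, and only its place in the queue changes.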

Broadcasting Updates to All Clients Using ActionCable

That’s all the infrastructure we need for one client to use locks, but they’re no good if other authors don’t know that an element is locked and can’t see the changes after they’re saved. We need to stream each user’s edits and locks to every other user, and luckily Rails provides a simple WebSockets solution called ActionCable.

ActionCable keeps track of what messages should be broadcast to whom with Channel objects.

I want to broadcast editing actions with one channel per case study, so I created an EditsChannel like this.

class EditsChannel < ApplicationCable::Channel

def subscribed

stream_for case_study if user_can_read_case?

end

private

def user_can_read_case?

Pundit.policy(current_reader, case_study).show?

end

def case_study

CaseStudy.friendly.find params[:case_slug]

rescue ActiveRecord::RecordNotFound

reject

end

end

With that channel, ActionCable handles the boilerplate of turning a call like

EditsChannel.broadcast_to slug, data

into a WebSocket frame containing data that every client subscribed to that case study’s channel receives.

All I have to do is call broadcast_to after handling every meaningful edit.

Triggering the Broadcast After Edits

My first thought was to use an ActiveRecord callback like after_save.

Some developers argue that callbacks should never be used since the side effects they entail are hard to reason about.

But I think there are times that callbacks are exactly the right tool for the job, making sure important tasks are always performed while also staying out of the way.

I do think callbacks are the right solution for this problem, but I didn’t end up using callbacks on the ActiveRecord objects.

Specifically, it’s because we only want to broadcast meaningful edits and not, for example, dependent timestamp updates that walk the graph from Card to Page to CaseElement to CaseStudy.

A better option is to broadcast edits in an after_action hook of the controllers, since the kind of edits we care about are those that come from clients via our API.

I made a controller concern to encapsulate the broadcast work and to provide a simple macro for configuration in each relevant controller.

module BroadcastEdits

extend ActiveSupport::Concern

class_methods do

def broadcast_edits(to:, type: nil)

after_action -> { broadcast_edit_to to, type: type || action_name },

if: :successful?, only: %i[create update destroy]

end

end

private

def broadcast_edit_to(resource_name, type:)

resource = instance_variable_get resource_name

BroadcastEdit.to resource, type: type.to_s,

session_id: request.headers['X-Session-ID']

end

end

This way, all I have to do is

class CardsController < ApplicationController

include BroadcastEdits

broadcast_edits to: :@card

# ...

and it will broadcast the changes to that model.

The broadcasts include the type of edit, create, update, or destroy, which is inferred from the controller action name unless provided explicitly.

(That exception is necessary because I’m treating some models, like Tagging, as compositional parts of other models, here CaseStudy — the creation or deletion of a tagging should broadcast like the updating of a case.)

So what’s actually happening when the callback fires? Well, I didn’t want to pollute the controllers with too much of this logic, so we’re just calling out to a service object, passing the resource that was edited, the type of edit, and a session id that the front-end gave us. What is that session id for? Every client subscribed to a case’s channel receives every broadcast and should act differently when the broadcast is of an edit it triggered. But more on that later.

The service object is pretty simple, dispatching a background job after memoizing a few attributes of the edited resource.

(It could have been even simpler if I weren’t such a sucker for literate call sites: I added the static method “to” so that BroadcastEdit.to resource reads like English.)

class BroadcastEdit

attr_reader :resource

def self.to(resource, type:, session_id:)

new(resource).call type, session_id

end

def initialize(resource)

@resource = resource

end

def call(type, session_id)

options = {

case_slug: resource.case_study.slug, cached_params: cached_params,

type: type, session_id: session_id

}

EditBroadcastingJob.perform_later resource, options

end

private

def cached_params

{ type: resource.model_name.name,

table: resource.model_name.plural,

param: resource.to_param }

end

end

Now, ActiveJob seems to let you pass database-backed model objects into jobs, but those jobs run in different processes, so that isn’t normal method dispatch.

What’s happening under the hood is that Rails is serializing resource into a globally unique id and then automatically “rehydrating” it with a database query in the worker process.

This is great for models that stick around, but we’re broadcasting deletions too.

So that’s what the cached_params are for: BroadcastEdit is called with an object that still has all its data, even though that data no longer exists in the database.

In order to broadcast it, we have to pass it to EditBroadcastingJob in a serializable way, and then handle the deserialization error.

It’s a little bit gross, but it works. (Is there a less gross way?)

class EditBroadcastingJob < ActiveJob::Base

def perform(watchable, case_slug:, cached_params:, type:, session_id:)

@watchable = watchable

@case_slug = case_slug

@type = type

@session_id = session_id

broadcast_edit

end

rescue_from ActiveJob::DeserializationError do |_exception|

kwargs = @serialized_arguments[1]

@watchable = kwargs['cached_params']

@case_slug = kwargs['case_slug']

@session_id = kwargs['session_id']

@type = :destroy

broadcast_edit

end

private

def broadcast_edit

EditsChannel.broadcast_to @case_slug,

type: @type, watchable: serialized_watchable,

editor_session_id: @session_id

end

def serialized_watchable

ActiveModel::Serializer.for(@watchable).as_json

end

end

I use ActiveModel::Serializers throughout the app to form my JSON API, so this broadcast job keeps it consistent, at least in the case of creations and updates, by deferring to that logic. In the case of deletions, there’s no full data to include but the cached parameters give the front end enough information about what to remove.

That’s how we keep all clients on the same page about changes to the case made by one person. But what about broadcasting the locks that one user is taking out? Actually, there’s no more work we need to do. I’m treating locks just like any other element, and using this same broadcast pipeline.

Updating the UI in Response to Broadcasts

All that’s left is to configure the React client to listen to the broadcasts. I’ll spare you the deep dive into how state is managed — we’re mostly talking about Redux — but this is the general idea of it.

When I’m initializing the app, I hit this thunk action to subscribe to the edits channel.

export function subscribeToEditsChannel() {

return (dispatch, getState) => {

// Google's crawler doesn't support WebSockets --

// fail silently rather than blowing up the whole page

if (!("WebSocket" in window)) return;

const { slug } = getState().caseData;

App.edits = App.cable.subscriptions.create(

{ channel: "EditsChannel", case_slug: slug },

{

received: ({ type, watchable, editor_session_id }) => {

// Don't double-apply edits that this user performed

if (editor_session_id === sessionId()) return;

dispatch(mapBroadcastToAction(type, watchable));

},

},

);

};

}

That uses ActionCable’s JavaScript library, initialized on App per Rails convention, to subscribe.

We pass the function to be called when the client receives a broadcast.

If the session id matches this client’s, we do nothing, since any edits made by this user are already reflected in the UI.

Otherwise, if it’s someone else’s edit, we dispatch the action returned by mapBroadcastToAction, which is just a big switch statement.
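The real switch isn't shown, so here is only a sketch of what such a mapping could look like. Every action shape below is hypothetical; the only things taken from the post are the three edit types and the `type`, `table`, and `param` fields on the broadcast object.

```javascript
// Hypothetical mapping from a broadcast to a Redux action.
function mapBroadcastToAction(type, watchable) {
  const isLock = watchable.type === "Lock";
  switch (type) {
    case "create":
      return isLock
        ? { type: "ADD_LOCK", lock: watchable }
        : { type: "ADD_ELEMENT", element: watchable };
    case "update":
      return { type: "UPDATE_ELEMENT", element: watchable };
    case "destroy":
      // Deletions only carry the cached type, table, and param.
      return isLock
        ? { type: "REMOVE_LOCK", param: watchable.param }
        : { type: "REMOVE_ELEMENT", table: watchable.table, param: watchable.param };
    default:
      throw new Error(`Unknown broadcast type: ${type}`);
  }
}

const lockAction = mapBroadcastToAction("create", {
  type: "Lock",
  param: "1",
  lockable: { type: "Card", param: "1" },
});
const removal = mapBroadcastToAction("destroy", {
  type: "Card",
  table: "cards",
  param: "3",
});
```

Since locks travel through the same pipeline as everything else, they only need their own branches here, not their own subscription.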

I momentarily considered going in and standardizing all my Redux actions so they all had the same predictable interface, but that wouldn’t have been worth the effort. You’ve got to stop sometime.

Conclusion

To recap, I added locks to the system, which are owned by one user and connected to one editable component. If someone else has a lock on a component one user tries to edit, their changes are rejected. There’s a simple API for creating and removing locks, and my React front-end manages them for the user with a reusable wrapper component. And with a simple after action callback, I can broadcast creations, updates, and deletions of editable components and locks to all the other users accessing a case.

Pessimistic locking struck the right balance of ease of use and ease of development. The fine granularity of my structured documents makes the need for users to take turns — which can be a point against a pessimistic approach — not such a problem at all. And it makes me really happy that I didn’t have to write the reconciliation logic that a usable optimistic approach (à la Google Docs) would require.

My motivation as a programmer is pretty simple. One third comes from the code itself: it makes me happy to write beautiful, readable, and reusable code that elegantly solves a problem. This project checked that box, for sure. But the other two thirds come from the exhilaration of seeing something that I made, that I think is cool, that previously did not exist, and using it and watching it work. You know I indulged myself, when it was all done, with five or ten minutes of sitting there with two browser windows open just clicking back and forth watching cards lock and unlock.